AI Demo说明文档

1. AI Demo开发框架介绍

1.1. AI Demo开发框架

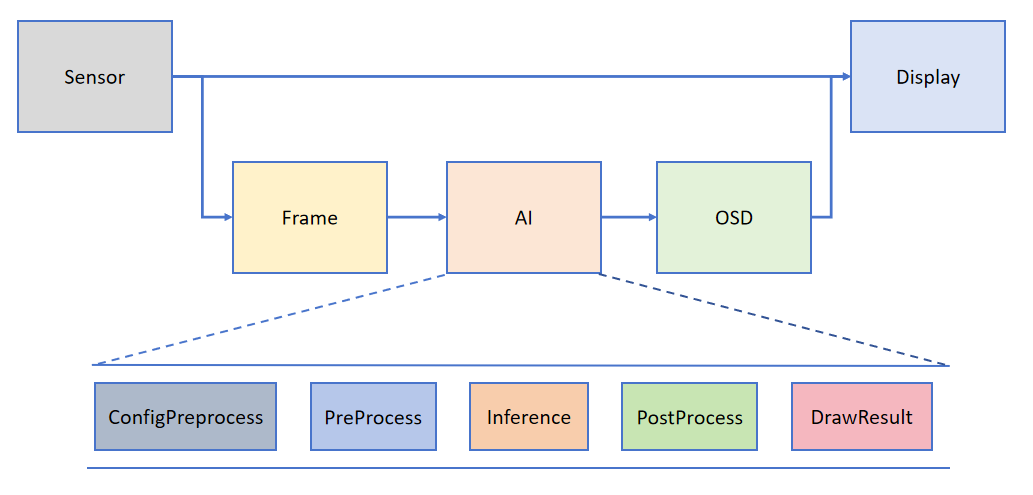

为了帮助用户简化AI部分的开发,基于K230_CanMV提供的API接口,搭建了配套的AI 开发框架。框架结构如下图所示:

Camera默认出两路图像,一路格式为YUV420,直接给到Display显示;另一路格式为RGB888,给到AI部分进行处理。AI主要实现任务的前处理、推理和后处理流程,得到后处理结果后将其绘制在osd image实例上,并送给Display叠加显示。

1.2. 接口介绍

1.2.1. PipeLine

我们将Media部分的代码封装在PipeLine类型中,通过固定的接口实现整个流程操作。

其中PipeLine类提供的接口包括:

-

初始化参数包括:

(1)rgb888p_size:list类型,预设给到AI部分的图像分辨率;如rgb888p_size=[1920,1080]。

(2)display_size:list类型,显示部分Display的分辨率;如display_size=[1920,1080]。

(3)display_mode:str类型,显示模式,包括”hdmi“和”lcd“;如display_mode=”hdmi“。

(4)debug_mode:int类型,耗时调试模式,如果大于0,打印操作耗时;如debug_mode=0。

-

create(sensor=None,hmirror=None,vfilp=None):

(1)sensor:参数为可选参数,类型为Sensor对象,可自主配置现有CanMV、01Studio和k230d zero开发板实现了自动探测,可以默认使用create()实现。

(2)hmirror:默认为None,当主动设置时为bool类型(True/False),表示是否实现水平方向镜像显示。

(3)vflip: 默认为None,当主动设置时为bool类型(True/False),表示是否实现垂直方向翻转。

-

get_frame():返回一帧ulab.numpy.ndarray类型图像数据,分辨率为rgb888p_size,排布为CHW。

-

show_image():PipeLine实例中预设一帧OSD图像,该接口将成员变量osd_img显示在屏幕上。

-

destroy():销毁PipeLine实例。

下面给出无AI部分的示例代码:

from libs.PipeLine import PipeLine, ScopedTiming

from media.media import *

import gc

import sys,os

if __name__ == "__main__":

# 显示模式,默认"hdmi",可以选择"hdmi"和"lcd"

display_mode="hdmi"

if display_mode=="hdmi":

display_size=[1920,1080]

else:

display_size=[800,480]

# 初始化PipeLine,用于图像处理流程

pl = PipeLine(rgb888p_size=[1920,1080], display_size=display_size, display_mode=display_mode)

pl.create() # 创建PipeLine实例

try:

while True:

os.exitpoint() # 检查是否有退出信号

with ScopedTiming("total",1):

img = pl.get_frame() # 获取当前帧数据

print(img.shape)

gc.collect() # 垃圾回收

except Exception as e:

sys.print_exception(e) # 打印异常信息

finally:

pl.destroy() # 销毁PipeLine实例

上述代码中,通过pl.get_frame()接口获取一帧分辨率为rgb888p_size的图像,类型为ulab.numpy.ndarray,排布为CHW。基于上面的代码得到了一帧图像给AI处理,您可以只关注AI推理部分的操作。

图像AI开发过程包括:图像预处理、模型推理、输出后处理的过程,我们将整个过程封装在Ai2d类和AIBase类中。

1.2.2. Ai2d

对于Ai2d类,我们给出了常见的几种预处理方法,包括crop/shift/pad/resize/affine。该类别提供的接口包括:

- 初始化参数包括:

(1)debug_mode:int类型,耗时调试模式,如果大于0,打印操作耗时;如debug_mode=0。

- set_ai2d_dtype(input_format,output_format,input_type,output_type)

(1)input_format:ai2d预处理输入格式。

(2)output_format:ai2d预处理输出格式。

输入输出格式支持如下所示:

enum class ai2d_format

{

YUV420_NV12 = 0,

YUV420_NV21 = 1,

YUV420_I420 = 2,

NCHW_FMT = 3,

RGB_packed = 4,

RAW16 = 5,

}

| 输入格式 | 输出格式 | 备注 |

|---|---|---|

| YUV420_NV12 | RGB_planar/YUV420_NV12 | |

| YUV420_NV21 | RGB_planar/YUV420_NV21 | |

| YUV420_I420 | RGB_planar/YUV420_I420 | |

| YUV400 | YUV400 | |

| NCHW(RGB_planar) | NCHW(RGB_planar) | |

| RGB_packed | RGB_planar/RGB_packed | |

| RAW16 | RAW16/8 | 深度图,执行shift操作 |

(3)input_type:输入数据类型。

(4)output_type:输出数据类型。

下面是接口调用示例:

from libs.AI2D import Ai2d

import nncase_runtime as nn

my_ai2d=Ai2d(debug_mode=1)

my_ai2d.set_ai2d_type(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)

my_ai2d.set_ai2d_type(nn.ai2d_format.RGB_packed, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)

-

crop(start_x,start_y,width,height):预处理crop函数。

(1)start_x:宽度方向的起始像素,int类型;

(2)start_y: 高度方向的起始像素,int类型;

(3)width: 宽度方向的crop长度,int类型;

(4)height: 高度方向的crop长度,int类型;

my_ai2d.crop(0,0,200,300)

- shift(shift_val):预处理shift函数。

(1)shift_val:右移的比特数,int类型;

my_ai2d.shift(2)

- pad(paddings,pad_mode,pad_val):预处理padding函数。

(1)paddings:list类型,各维度两侧padding的大小,对于4维的�图像(NCHW),该参数包含8个值,分别表示N/C/H/W四个维度两侧的padding大小,一般只在后两个维度做padding;

(2)pad_mode:只支持constant padding,直接设为0;

(3)pad_val:list类型,每个像素位置填充的值,比如[114,114,114]、[0,0,0]

my_ai2d.pad([0,0,0,0,5,5,15,15],0,[114,114,114])

- resize(interp_method,interp_mode):预处理resize函数。

(1)interp_method:resize插值方法,ai2d_interp_method类型,包括:nn.interp_method.tf_nearest、nn.interp_method.tf_bilinear、nn.interp_method.cv2_nearest、nn.interp_method.cv2_bilinear;

(2)interp_mode:resize模式,ai2d_interp_mode类型,包括:nn.interp_mode.none、nn.interp_mode.align_corner、nn.interp_mode.half_pixel;

my_ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)

- affine(interp_method,crop_round,bound_ind,bound_val,bound_smooth,M):预处理affine函数。

(1)interp_method:Affine采用的插值方法,ai2d_interp_method类型,包括:nn.interp_method.tf_nearest、nn.interp_method.tf_bilinear、nn.interp_method.cv2_nearest、nn.interp_method.cv2_bilinear;

(2)cord_round:整数边界0或者1,uint32_t类型;

(3)bound_ind:边界像素模式0或者1,uint32_t类型;

(4)bound_val:边界填充值,uint32_t类型;

(5)bound_smooth:边界平滑0或者1,uint32_t类型;

(6)M:仿射变换矩阵对应的vector,仿射变换为Y=[a_0, a_1; a_2, a_3] \cdot X + [b_0, b_1] $, 则 M=[a_0,a_1,b_0,a_2,a_3,b_1 ],list类型。

affine_matrix=[0.2159457, -0.031286, -59.5312, 0.031286, 0.2159457, -35.30719]

my_ai2d.affine(nn.interp_method.cv2_bilinear,0, 0, 127, 1,affine_matrix)

- build(ai2d_input_shape,ai2d_output_shape):ai2d构造函数,前面配置的预处理方法起作用。

(1)ai2d_input_shape:ai2d输入shape,list类型;

(2)ai2d_output_shape:ai2d输出shape,list类型;

my_ai2d.build([1,3,224,224],[1,3,512,512])

- run(input_np):调用配置好的ai2d进行预处理的函数,返回一个tensor类型数据,可以直接给模型使用,也可以通过to_numpy()转换成ulab.numpy.ndarray类型的数据。

(1)input_np:ulab.numpy.ndarray类型,ai2d预处理的输入数据,shape和build函数中设置的ai2d_input_shape一致。

注意:

(1) Affine和Resize功能是互斥的,不能同时开启; (2) Shift功能的输入格式只能是Raw16; (3) Pad value是按通道配置的,对应的list元素个数要与channel数相等; (4) 当配置了多个功能时,执行顺序是Crop->Shift->Resize/Affine->Pad, 配置参数时注意要匹配;如果不符合该顺序,需要初始化多个Ai2d实例实现预处理过程;

下面是一个完整的示例:

from libs.PipeLine import PipeLine, ScopedTiming

from libs.AI2D import Ai2d

from media.media import *

import nncase_runtime as nn

import gc

import sys,os

if __name__ == "__main__":

# 显示模式,默认"hdmi",可以选择"hdmi"和"lcd"

display_mode="hdmi"

if display_mode=="hdmi":

display_size=[1920,1080]

else:

display_size=[800,480]

# 初始化PipeLine,用于图像处理流程

pl = PipeLine(rgb888p_size=[512,512], display_size=display_size, display_mode=display_mode)

pl.create() # 创建PipeLine实例

my_ai2d=Ai2d(debug_mode=0) #初始化Ai2d实例

# 配置resize预处理方法

my_ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)

# 构建预处理过程

my_ai2d.build([1,3,512,512],[1,3,640,640])

try:

while True:

os.exitpoint() # 检查是否有退出信号

with ScopedTiming("total",1):

img = pl.get_frame() # 获取当前帧数据

print(img.shape) # 原图shape为[1,3,512,512]

ai2d_output_tensor=my_ai2d.run(img) # 执行resize预处理

ai2d_output_np=ai2d_output_tensor.to_numpy() # 类型转换

print(ai2d_output_np.shape) # 预处理后的shape为[1,3,640,640]

gc.collect() # 垃圾回收

except Exception as e:

sys.print_exception(e) # 打印异常信息

finally:

pl.destroy() # 销毁PipeLine实例

1.2.3. AIBase

AIBase部分封装了实现模型推理的主要接口,也是进行AI开发主要关注的部分。用户需要按照自己demo的要求实现前处理和后处理部分。

AIBase提供的接口包括:

- 初始化参数包括:

(1)kmodel_path:str类型,kmodel路径,用于初始化kpu对象并加载kmodel;

(2)model_input_size:list类型,可选,模型输入分辨率,在单输入时起作用,格式为[width,height],如:model_input_size=[512,512];

(3)rgb888p_size:list类型,可选,AI得到的图像的分辨率,在单输入时起作用,格式为[width,height],如:rgb888p_size=[640,640];

(4)debug_mode:int类型,耗时调试模式,如果大于0,打印操作耗时;如debug_mode=0。

- get_kmodel_inputs_num():返回当前模型的输入个数;

- get_kmodel_outputs_num():返回当前模型的输出个数;

- preprocess(input_np):使用ai2d对input_np做预处理,如果不使用单个ai2d实例做预处理,需要在�子类重写该函数。

(1)input_np:ulab.numpy.ndarray类型,ai2d预处理输入数据;

(2)返回tensor列表;如果该方法重写,请注意返回类型:tensor类型的列表;

- inference(tensors):对预处理后得到的kmodel的输入(类型为tensor)进行推理,得到多个输出(类型为ulab.numpy.ndarray);

(1)tensors:列表类型,模型的输入,可以是一个可以是多个;

(2)返回ulab.numpy.ndarray类型的列表;

Tips:

Image对象转ulab.numpy.ndarray:

import image

img.to_rgb888().to_numpy_ref() #返回的array是HWC排布ulab.numpy.ndarray转Image对象:

import ulab.numpy as np

import image

img_np = np.zeros((height,width,4),dtype=np.uint8)

img = image.Image(width, height, image.ARGB8888, alloc=image.ALLOC_REF,data =img_np)ulab.numpy.ndarray转tensor类型:

import ulab.numpy as np

import nncase_runtime as nn

img_np = np.zeros((height,width,4),dtype=np.uint8)

tensor = nn.from_numpy(img_np)tensor 类型转ulab.numpy.ndarray:

import ulab.numpy as np

import nncase_runtime as nn

img_np=tensor.to_numpy()

- postprocess(results):模型输出后处�理函数,该函数需要用户在任务子类重写,因为不同AI任务的后处理是不同的。

(1)results:list类型,list元素是ulab.numpy.ndarray类型,模型的推理输出。

- run(input_np):模型的前处理、推理、后处理流程,适用于单ai2d实例能解决的前处理的AI任务,其他任务需要用户在子类重写。

(1)input_np:ulab.numpy.ndarray类型,ai2d预处理输入数据;该数据通过ai2d预处理输出1个tensor,tensor通过模型推理得到输出列表results,results经过后处理过程得到AI结果。

- deinit():AIBase销毁函数。

1.2.4. ScopedTiming

ScopedTiming 类在PipeLine.py模块内,是一个用来测量代码块执行时间的上下文管理器。上下文管理器通过定义包含 __enter__ 和 __exit__ 方法的类来创建。当在 with 语句中使用该类的实例时,__enter__ 在进入 with 块时被调用,__exit__ 在离开时被调用。

from libs.PipeLine import ScopedTiming

def test_time():

with ScopedTiming("test",1):

#####代码#####

# ...

##############

1.3. 应用方法和示例

1.3.1. 概述

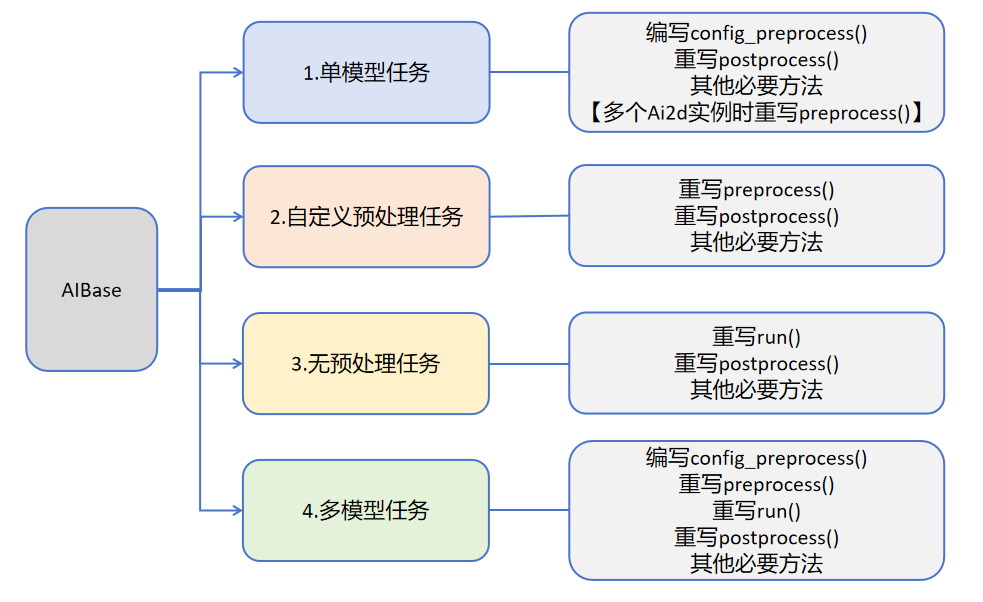

用户可根据具体的AI场景自写任务类继承AIBase,可以将任务分为如下四类:单模型任务、多模型任务,自定义预处理任务、无预处理任务。不同任务需要编写不同的代码实现,具体如下图所示:

关于不同任务的介绍:

| 任务类型 | 任务描述 | 代码说明 |

|---|---|---|

| 单模型任务 | 该任务只有一个模型,只需要关注该模型的前处理、推理、后处理过程,此类任务的前处理使用Ai2d实现,可能使用一个Ai2d实例,也可能使用多个Ai2d实例,后处理基于场景自定义。 | 编写自定义任务类,主要关注任务类的config_preprocess、postprocess、以及该任务需要的其他方法如:draw_result等。 如果该任务包含多个Ai2d实例,则需要重写preprocess,按照预处理的顺序设置预处理阶段的计算过程。 |

| 自定义预处理任务 | 该任务只有一个模型,只需要关注该模型的前处理、推理、后处理过程,此类任务的前处理不使用Ai2d实现,可以使用ulab.numpy自定义,后处理基于场景自定义。 | 编写自定义任务类,主要关注任务类的preprocess、postprocess、以及该任务需要的其他方法如:draw_result等 |

| 无预处理任务 | 该任务只有一个模型且不需要预处理,只需要关注该模型的推理和后处理过程,此类任务一般作为多模型任务的一部分,直接对前一个模型的输出做为输入推理,后处理基于需求自定义。 | 编写自定义任务类,主要关注任务类的run(模型推理的整个过程,包括preprocess、inference、postprocess中的全部或某一些步骤)、postprocess、以及该任务需要的其他方法如:draw_results等 |

| 多模型任务 | 该任务包含多个模型,可能是串联,也可能是其他组合方式。对于每个模型基本上属于前三种模型中的一种,最后通过一个完整的任务类将上述模型子任务统一起来。 | 编写多个子模型任务类,不同子模型任务参照前三种任务定义。不同任务关注不同的方法。 编写多模型任务类,将子模型任务类统一起来实现整个场景。 |

1.3.2. 单模型任务

单模型任务的伪代码结构如下:

from libs.PipeLine import PipeLine, ScopedTiming

from libs.AIBase import AIBase

from libs.AI2D import Ai2d

import os

from media.media import *

import nncase_runtime as nn

import ulab.numpy as np

import image

import gc

import sys

# 自定义AI任务类,继承自AIBase基类

class MyAIApp(AIBase):

def __init__(self, kmodel_path, model_input_size, rgb888p_size=[224,224], display_size=[1920,1080], debug_mode=0):

# 调用基类的构造函数

super().__init__(kmodel_path, model_input_size, rgb888p_size, debug_mode)

# 模型文件路径

self.kmodel_path = kmodel_path

# 模型输入分辨率

self.model_input_size = model_input_size

# sensor给到AI的图像分辨率,并对宽度进行16的对齐

self.rgb888p_size = [ALIGN_UP(rgb888p_size[0], 16), rgb888p_size[1]]

# 显示分辨率,并对宽度进行16的对齐

self.display_size = [ALIGN_UP(display_size[0], 16), display_size[1]]

# 是否开启调试模式

self.debug_mode = debug_mode

# 实例化Ai2d,用于实现模型预处理

self.ai2d = Ai2d(debug_mode)

# 设置Ai2d的输入输出格式和类型

self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)

# 配置预处理操作,这里使用了pad和resize,Ai2d支持crop/shift/pad/resize/affine,具体代码请打开/sdcard/app/libs/AI2D.py查看

def config_preprocess(self, input_image_size=None):

with ScopedTiming("set preprocess config", self.debug_mode > 0):

# 初始化ai2d预处理配置,默认为sensor给到AI的尺寸,可以通过设置input_image_size自行修改输入尺寸

ai2d_input_size = input_image_size if input_image_size else self.rgb888p_size

# 配置resize预处理方法

self.ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)

# 构建预处理流程

self.ai2d.build([1,3,ai2d_input_size[1],ai2d_input_size[0]],[1,3,self.model_input_size[1],self.model_input_size[0]])

# 自定义当前任务的后处理,results是模型输出array列表,需要根据实际任务重写

def postprocess(self, results):

with ScopedTiming("postprocess", self.debug_mode > 0):

pass

# 绘制结果到画面上,需要根据任务自己写

def draw_result(self, pl, dets):

with ScopedTiming("display_draw", self.debug_mode > 0):

pass

if __name__ == "__main__":

# 显示模式,默认"hdmi",可以选择"hdmi"和"lcd"

display_mode="hdmi"

if display_mode=="hdmi":

display_size=[1920,1080]

else:

display_size=[800,480]

# 设置模型路径,这里要替换成当前任务模型

kmodel_path = "example_test.kmodel"

rgb888p_size = [1920, 1080]

###### 其它参数########

...

######################

# 初始化PipeLine,用于图像处理流程

pl = PipeLine(rgb888p_size=rgb888p_size, display_size=display_size, display_mode=display_mode)

pl.create() # 创建PipeLine实例

# 初始化自定义AI任务实例

my_ai = MyAIApp(kmodel_path, model_input_size=[320, 320],rgb888p_size=rgb888p_size, display_size=display_size, debug_mode=0)

my_ai.config_preprocess() # 配置预处理

try:

while True:

os.exitpoint() # 检查是否有退出信号

with ScopedTiming("total",1):

img = pl.get_frame() # 获取当前帧数据

res = my_ai.run(img) # 推理当前帧

my_ai.draw_result(pl, res) # 绘制结果

pl.show_image() # 显示结果

gc.collect() # 垃圾回�收

except Exception as e:

sys.print_exception(e) # 打印异常信息

finally:

my_ai.deinit() # 反初始化

pl.destroy() # 销毁PipeLine实例

下面以人脸检测为例给出示例代码:

from libs.PipeLine import PipeLine, ScopedTiming

from libs.AIBase import AIBase

from libs.AI2D import Ai2d

import os

import ujson

from media.media import *

from time import *

import nncase_runtime as nn

import ulab.numpy as np

import time

import utime

import image

import random

import gc

import sys

import aidemo

# 自定义人脸检测类,继承自AIBase基类

class FaceDetectionApp(AIBase):

def __init__(self, kmodel_path, model_input_size, anchors, confidence_threshold=0.5, nms_threshold=0.2, rgb888p_size=[224,224], display_size=[1920,1080], debug_mode=0):

super().__init__(kmodel_path, model_input_size, rgb888p_size, debug_mode) # 调用基类的构造函数

self.kmodel_path = kmodel_path # 模型文件路径

self.model_input_size = model_input_size # 模型输入分辨率

self.confidence_threshold = confidence_threshold # 置信度阈值

self.nms_threshold = nms_threshold # NMS(非极大值抑制)阈值

self.anchors = anchors # 锚点数据,用于目标检测

self.rgb888p_size = [ALIGN_UP(rgb888p_size[0], 16), rgb888p_size[1]] # sensor给到AI的图像分辨率,并对宽度进行16的对齐

self.display_size = [ALIGN_UP(display_size[0], 16), display_size[1]] # 显示分辨率,并对宽度进行16的对齐

self.debug_mode = debug_mode # 是否开启调试模式

self.ai2d = Ai2d(debug_mode) # 实例化Ai2d,用于实现模型预处理

self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8) # 设置Ai2d的输入输出格式和类型

# 配置预处理操作,这里使用了pad和resize,Ai2d支持crop/shift/pad/resize/affine,具体代码请打开/sdcard/app/libs/AI2D.py查看

def config_preprocess(self, input_image_size=None):

with ScopedTiming("set preprocess config", self.debug_mode > 0): # 计时器,如果debug_mode大于0则开启

ai2d_input_size = input_image_size if input_image_size else self.rgb888p_size # 初始化ai2d预处理配置,默认为sensor给到AI的尺寸,可以通过设置input_image_size自行修改输入尺寸

top, bottom, left, right = self.get_padding_param() # 获取padding参数

self.ai2d.pad([0, 0, 0, 0, top, bottom, left, right], 0, [104, 117, 123]) # 填充边缘

self.ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel) # 缩放图像

self.ai2d.build([1,3,ai2d_input_size[1],ai2d_input_size[0]],[1,3,self.model_input_size[1],self.model_input_size[0]]) # 构建预处理流程

# 自定义当前任务的后处理,results是模型输出array列表,这里使用了aidemo库的face_det_post_process接口

def postprocess(self, results):

with ScopedTiming("postprocess", self.debug_mode > 0):

post_ret = aidemo.face_det_post_process(self.confidence_threshold, self.nms_threshold, self.model_input_size[1], self.anchors, self.rgb888p_size, results)

if len(post_ret) == 0:

return post_ret

else:

return post_ret[0]

# 绘制检测结果到画面上

def draw_result(self, pl, dets):

with ScopedTiming("display_draw", self.debug_mode > 0):

if dets:

pl.osd_img.clear() # 清除OSD图像

for det in dets:

# 将检测框的坐标转换为显示分辨率下的坐标

x, y, w, h = map(lambda x: int(round(x, 0)), det[:4])

x = x * self.display_size[0] // self.rgb888p_size[0]

y = y * self.display_size[1] // self.rgb888p_size[1]

w = w * self.display_size[0] // self.rgb888p_size[0]

h = h * self.display_size[1] // self.rgb888p_size[1]

pl.osd_img.draw_rectangle(x, y, w, h, color=(255, 255, 0, 255), thickness=2) # 绘制矩形框

else:

pl.osd_img.clear()

# 获取padding参数

def get_padding_param(self):

dst_w = self.model_input_size[0] # 模型输入宽度

dst_h = self.model_input_size[1] # 模型输入高度

ratio_w = dst_w / self.rgb888p_size[0] # 宽度缩放比例

ratio_h = dst_h / self.rgb888p_size[1] # 高度缩放比例

ratio = min(ratio_w, ratio_h) # 取较小的缩放比例

new_w = int(ratio * self.rgb888p_size[0]) # 新宽度

new_h = int(ratio * self.rgb888p_size[1]) # 新高度

dw = (dst_w - new_w) / 2 # 宽度差

dh = (dst_h - new_h) / 2 # 高度差

top = int(round(0))

bottom = int(round(dh * 2 + 0.1))

left = int(round(0))

right = int(round(dw * 2 - 0.1))

return top, bottom, left, right

if __name__ == "__main__":

# 显示模式,默认"hdmi",可以选择"hdmi"和"lcd"

display_mode="hdmi"

if display_mode=="hdmi":

display_size=[1920,1080]

else:

display_size=[800,480]

# 设置模型路径和其他参数

kmodel_path = "/sdcard/app/tests/kmodel/face_detection_320.kmodel"

# 其它参数

confidence_threshold = 0.5

nms_threshold = 0.2

anchor_len = 4200

det_dim = 4

anchors_path = "/sdcard/app/tests/utils/prior_data_320.bin"

anchors = np.fromfile(anchors_path, dtype=np.float)

anchors = anchors.reshape((anchor_len, det_dim))

rgb888p_size = [1920, 1080]

# 初始化PipeLine,用于图像处理流程

pl = PipeLine(rgb888p_size=rgb888p_size, display_size=display_size, display_mode=display_mode)

pl.create() # 创建PipeLine实例

# 初始化自定义人脸检测实例

face_det = FaceDetectionApp(kmodel_path, model_input_size=[320, 320], anchors=anchors, confidence_threshold=confidence_threshold, nms_threshold=nms_threshold, rgb888p_size=rgb888p_size, display_size=display_size, debug_mode=0)

face_det.config_preprocess() # 配置预处理

try:

while True:

os.exitpoint() # 检查是否有退出信号

with ScopedTiming("total",1):

img = pl.get_frame() # 获取当前帧数据

res = face_det.run(img) # 推理当前帧

face_det.draw_result(pl, res) # 绘制结果

pl.show_image() # 显示结果

gc.collect() # 垃圾回收

except Exception as e:

sys.print_exception(e) # 打印异常信息

finally:

face_det.deinit() # 反初始化

pl.destroy() # 销毁PipeLine实例

多个Ai2d实例时的伪代码如下:

from libs.PipeLine import PipeLine, ScopedTiming

from libs.AIBase import AIBase

from libs.AI2D import Ai2d

import os

from media.media import *

import nncase_runtime as nn

import ulab.numpy as np

import image

import gc

import sys

# 自定义AI任务类,继承自AIBase基类

class MyAIApp(AIBase):

def __init__(self, kmodel_path, model_input_size, rgb888p_size=[224,224], display_size=[1920,1080], debug_mode=0):

# 调用基类的构造函数

super().__init__(kmodel_path, model_input_size, rgb888p_size, debug_mode)

# 模型文件路径

self.kmodel_path = kmodel_path

# 模型输入分辨率

self.model_input_size = model_input_size

# sensor给到AI的图像分辨率,并对宽度进行16的对齐

self.rgb888p_size = [ALIGN_UP(rgb888p_size[0], 16), rgb888p_size[1]]

# 显示分辨率,并对宽度进行16的对齐

self.display_size = [ALIGN_UP(display_size[0], 16), display_size[1]]

# 是否开启调试模式

self.debug_mode = debug_mode

# 实例化Ai2d,用于实现模型预处理

self.ai2d_resize = Ai2d(debug_mode)

# 设置Ai2d的输入输出格式和类型

self.ai2d_resize.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)

# 实例化Ai2d,用于实现模型预处理

self.ai2d_resize = Ai2d(debug_mode)

# 设置Ai2d的输入输出格式和类型

self.ai2d_resize.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)

# 实例化Ai2d,用于实现模型预处理

self.ai2d_crop = Ai2d(debug_mode)

# 设置Ai2d的输入输出格式和类型

self.ai2d_crop.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)

# 配置预处理操作,这里使用了pad和resize,Ai2d支持crop/shift/pad/resize/affine,具体代码请打开/sdcard/app/libs/AI2D.py查看

def config_preprocess(self, input_image_size=None):

with ScopedTiming("set preprocess config", self.debug_mode > 0):

# 初始化ai2d预处理配置,默认为sensor给到AI的尺寸,可以通过设置input_image_size自行修改输入尺寸

ai2d_input_size = input_image_size if input_image_size else self.rgb888p_size

# 配置resize预处理方法

self.ai2d_resize.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)

# 构建预处理流程

self.ai2d_resize.build([1,3,ai2d_input_size[1],ai2d_input_size[0]],[1,3,640,640])

# 配置crop预处理方法

self.ai2d_crop.crop(0,0,320,320)

# 构建预处理流程

self.ai2d_crop.build([1,3,640,640],[1,3,320,320])

# 假设该任务需要crop和resize预处理,顺序是先resize再crop,该顺序不符合ai2d的处理顺序,因此需要设置两个Ai2d实例分别处理

def preprocess(self,input_np):

resize_tensor=self.ai2d_resize.run(input_np)

resize_np=resize_tensor.to_numpy()

crop_tensor=self.ai2d_crop.run(resize_np)

return [crop_tensor]

# 自定义当前任务的后处理,results是模型输出array列表,需要根据实际任务重写

def postprocess(self, results):

with ScopedTiming("postprocess", self.debug_mode > 0):

pass

# 绘制结果到画面上,需要根据任务自己写

def draw_result(self, pl, dets):

with ScopedTiming("display_draw", self.debug_mode > 0):

pass

# 重写deinit,释放多个ai2d资源

def deinit(self):

with ScopedTiming("deinit",self.debug_mode > 0):

del self.ai2d_resize

del self.ai2d_crop

super().deinit()

if __name__ == "__main__":

# 显示模式,默认"hdmi",可以选择"hdmi"和"lcd"

display_mode="hdmi"

if display_mode=="hdmi":

display_size=[1920,1080]

else:

display_size=[800,480]

# 设置模型路径,这里要替换成当前任务模型

kmodel_path = "example_test.kmodel"

rgb888p_size = [1920, 1080]

###### 其它参数########

...

######################

# 初始化PipeLine,用于图像处理流程

pl = PipeLine(rgb888p_size=rgb888p_size, display_size=display_size, display_mode=display_mode)

pl.create() # 创建PipeLine实例

# 初始化自定义AI任务实例

my_ai = MyAIApp(kmodel_path, model_input_size=[320, 320],rgb888p_size=rgb888p_size, display_size=display_size, debug_mode=0)

my_ai.config_preprocess() # �配置预处理

try:

while True:

os.exitpoint() # 检查是否有退出信号

with ScopedTiming("total",1):

img = pl.get_frame() # 获取当前帧数据

res = my_ai.run(img) # 推理当前帧

my_ai.draw_result(pl, res) # 绘制结果

pl.show_image() # 显示结果

gc.collect() # 垃圾回收

except Exception as e:

sys.print_exception(e) # 打印异常信息

finally:

my_ai.deinit() # 反初始化

pl.destroy() # 销毁PipeLine实例

1.3.3. 自定义预处理任务

对于需要重写前处理(不使用提供的ai2d类,自己手动写预处理)的AI任务伪代码如下:

from libs.PipeLine import PipeLine, ScopedTiming

from libs.AIBase import AIBase

from libs.AI2D import Ai2d

import os

from media.media import *

import nncase_runtime as nn

import ulab.numpy as np

import image

import gc

import sys

# 自定义AI任务类,继承自AIBase基类

class MyAIApp(AIBase):

def __init__(self, kmodel_path, model_input_size, rgb888p_size=[224,224], display_size=[1920,1080], debug_mode=0):

# 调用基类的构造函数

super().__init__(kmodel_path, model_input_size, rgb888p_size, debug_mode)

# 模型文件路径

self.kmodel_path = kmodel_path

# 模型输入分辨率

self.model_input_size = model_input_size

# sensor给到AI的图像分�辨率,并对宽度进行16的对齐

self.rgb888p_size = [ALIGN_UP(rgb888p_size[0], 16), rgb888p_size[1]]

# 显示分辨率,并对宽度进行16的对齐

self.display_size = [ALIGN_UP(display_size[0], 16), display_size[1]]

# 是否开启调试模式

self.debug_mode = debug_mode

# 实例化Ai2d,用于实现模型预处理

self.ai2d = Ai2d(debug_mode)

# 设置Ai2d的输入输出格式和类型

self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)

# 对于不使用ai2d完成预处理的AI任务,使用封装的接口或者ulab.numpy实现预处理,需要在子类中重写该函数

def preprocess(self,input_np):

#############

#注意自定义预处理过程

#############

return [tensor]

# 自定义当前任务的后处理,results是模型输出array列表,需要根据实际任务重写

def postprocess(self, results):

with ScopedTiming("postprocess", self.debug_mode > 0):

pass

# 绘制结果到画面上,需要根据任务自己写

def draw_result(self, pl, dets):

with ScopedTiming("display_draw", self.debug_mode > 0):

pass

if __name__ == "__main__":

# 显示模式,默认"hdmi",可以选择"hdmi"和"lcd"

display_mode="hdmi"

if display_mode=="hdmi":

display_size=[1920,1080]

else:

display_size=[800,480]

# 设置模型路径,这里要替换成当前任务模型

kmodel_path = "example_test.kmodel"

rgb888p_size = [1920, 1080]

###### 其它参数########

...

######################

# 初始化PipeLine,用于图像处理流程

pl = PipeLine(rgb888p_size=rgb888p_size, display_size=display_size, display_mode=display_mode)

pl.create() # 创建PipeLine实例

# 初始化自定义AI任务实例

my_ai = MyAIApp(kmodel_path, model_input_size=[320, 320],rgb888p_size=rgb888p_size, display_size=display_size, debug_mode=0)

my_ai.config_preprocess() # 配置预处理

try:

while True:

os.exitpoint() # 检查是否有退出信号

with ScopedTiming("total",1):

img = pl.get_frame() # 获取当前帧数据

res = my_ai.run(img) # 推理当前帧

my_ai.draw_result(pl, res) # 绘制结果

pl.show_image() # 显示结果

gc.collect() # 垃圾回收

except Exception as e:

sys.print_exception(e) # 打印异常信息

finally:

my_ai.deinit() # 反初始化

pl.destroy() # 销毁PipeLine实例

以关键词唤醒keyword_spotting为例:

from libs.PipeLine import ScopedTiming

from libs.AIBase import AIBase

from libs.AI2D import Ai2d

from media.pyaudio import * # 音频模块

from media.media import * # 软件抽象模块,主要封装媒体数据链路以及媒体缓冲区

import media.wave as wave # wav音频处理模块

import nncase_runtime as nn # nncase运行模块,封装了kpu(kmodel推理)和ai2d(图片预处理加速)操作

import ulab.numpy as np # 类似python numpy操作,但也会有一些接口不同

import aidemo # aidemo模块,封装ai demo相关前处理、后处理等操作

import time # 时间统计

import struct # 字节字符转换模块

import gc # 垃圾回收模块

import os,sys # 操作系统接口模块

# 自定义关键词唤醒类,继承自AIBase基类

class KWSApp(AIBase):

def __init__(self, kmodel_path, threshold, debug_mode=0):

super().__init__(kmodel_path) # 调用基类的构造函数

self.kmodel_path = kmodel_path # 模型文件路径

self.threshold=threshold

self.debug_mode = debug_mode # 是否开启调试模式

self.cache_np = np.zeros((1, 256, 105), dtype=np.float)

# 自定义预处理,返回模型输入tensor列表

def preprocess(self,pcm_data):

pcm_data_list=[]

# 获取音频流数据

for i in range(0, len(pcm_data), 2):

# 每两个字节组织成一个有符号整数,然后将其转换为浮点数,即为一次采样的数据,加入到当前一帧(0.3s)的数据列表中

int_pcm_data = struct.unpack("<h", pcm_data[i:i+2])[0]

float_pcm_data = float(int_pcm_data)

pcm_data_list.append(float_pcm_data)

# 将pcm数据处理为模型输入的特征向量

mp_feats = aidemo.kws_preprocess(fp, pcm_data_list)[0]

mp_feats_np = np.array(mp_feats).reshape((1, 30, 40))

audio_input_tensor = nn.from_numpy(mp_feats_np)

cache_input_tensor = nn.from_numpy(self.cache_np)

return [audio_input_tensor,cache_input_tensor]

# 自定义当前任务的后处理,results是模型输出array列表

def postprocess(self, results):

with ScopedTiming("postprocess", self.debug_mode > 0):

logits_np = results[0]

self.cache_np= results[1]

max_logits = np.max(logits_np, axis=1)[0]

max_p = np.max(max_logits)

idx = np.argmax(max_logits)

# 如果分数大于阈值,且idx==1(即包含唤醒词),播放回复音频

if max_p > self.threshold and idx == 1:

return 1

else:

return 0

if __name__ == "__main__":

os.exitpoint(os.EXITPOINT_ENABLE)

nn.shrink_memory_pool()

# 设置模型路径和其他参数

kmodel_path = "/sdcard/app/tests/kmodel/kws.kmodel"

# 其��它参数

THRESH = 0.5 # 检测阈值

SAMPLE_RATE = 16000 # 采样率16000Hz,即每秒采样16000次

CHANNELS = 1 # 通道数 1为单声道,2为立体声

FORMAT = paInt16 # 音频输入输出格式 paInt16

CHUNK = int(0.3 * 16000) # 每次读取音频数据的帧数,设置为0.3s的帧数16000*0.3=4800

reply_wav_file = "/sdcard/app/tests/utils/wozai.wav" # kws唤醒词回复音频路径

# 初始化音频预处理接口

fp = aidemo.kws_fp_create()

# 初始化音频流

p = PyAudio()

p.initialize(CHUNK)

MediaManager.init() #vb buffer初始化

# 用于采集实时音频数据

input_stream = p.open(format=FORMAT,channels=CHANNELS,rate=SAMPLE_RATE,input=True,frames_per_buffer=CHUNK)

# 用于播放回复音频

output_stream = p.open(format=FORMAT,channels=CHANNELS,rate=SAMPLE_RATE,output=True,frames_per_buffer=CHUNK)

# 初始化自定义关键词唤醒实例

kws = KWSApp(kmodel_path,threshold=THRESH,debug_mode=0)

try:

while True:

os.exitpoint() # 检查是否有退出信号

with ScopedTiming("total",1):

pcm_data=input_stream.read()

res=kws.run(pcm_data)

if res:

print("====Detected XiaonanXiaonan!====")

wf = wave.open(reply_wav_file, "rb")

wav_data = wf.read_frames(CHUNK)

while wav_data:

output_stream.write(wav_data)

wav_data = wf.read_frames(CHUNK)

time.sleep(1) # 时间缓冲,用于播放回复声音

wf.close()

else:

print("Deactivated!")

gc.collect() # 垃圾回收

except Exception as e:

sys.print_exception(e) # 打印异常信息

finally:

input_stream.stop_stream()

output_stream.stop_stream()

input_stream.close()

output_stream.close()

p.terminate()

MediaManager.deinit() #释放vb buffer

aidemo.kws_fp_destroy(fp)

kws.deinit() # 反初始化

1.3.4. 无预处理任务

对于不需要预处理(直接输入推理)的AI任务伪代码如下:

from libs.PipeLine import PipeLine, ScopedTiming

from libs.AIBase import AIBase

from libs.AI2D import Ai2d

import os

from media.media import *

import nncase_runtime as nn

import ulab.numpy as np

import image

import gc

import sys

# 自定义AI任务类,继承自AIBase基类

class MyAIApp(AIBase):

def __init__(self, kmodel_path, model_input_size, rgb888p_size=[224,224], display_size=[1920,1080], debug_mode=0):

# 调用基类的构造函数

super().__init__(kmodel_path, model_input_size, rgb888p_size, debug_mode)

# 模型文件路径

self.kmodel_path = kmodel_path

# 模型输入分辨率

self.model_input_size = model_input_size

# sensor给到AI的图像分辨率,并对宽度进行16的对齐

self.rgb888p_size = [ALIGN_UP(rgb888p_size[0], 16), rgb888p_size[1]]

# 显示分辨率,并对宽度进行16的对齐

self.display_size = [ALIGN_UP(display_size[0], 16), display_size[1]]

# 是否开启调试模式

self.debug_mode = debug_mode

# 自定义当前任务的后处理,results是模型输出array列表,需要根据实际任务重写

def postprocess(self, results):

with ScopedTiming("postprocess", self.debug_mode > 0):

pass

# 对于用预处理的AI任务,需要在子类中重写该函数

def run(self,inputs_np):

# 先将ulab.numpy.ndarray列表转换成tensor列表

tensors=[]

for input_np in inputs_np:

tensors.append(nn.from_numpy(input_np))

# 调用AIBase内的inference函数进行模型推理

results=self.inference(tensors)

# 调用当前子类的postprocess方法进行自定义后处理

outputs=self.postprocess(results)

return outputs

# 绘制结果到画面上,需要根据任务自己写

def draw_result(self, pl, dets):

with ScopedTiming("display_draw", self.debug_mode > 0):

pass

if __name__ == "__main__":

# 显示模式,默认"hdmi",可以选择"hdmi"和"lcd"

display_mode="hdmi"

if display_mode=="hdmi":

display_size=[1920,1080]

else:

display_size=[800,480]

# 设置模型路径,这里要替换成当前任务模型

kmodel_path = "example_test.kmodel"

rgb888p_size = [1920, 1080]

###### 其它参数########

...

######################

# 初始化PipeLine,用于图像处理流程

pl = PipeLine(rgb888p_size=rgb888p_size, display_size=display_size, display_mode=display_mode)

pl.create() # 创建PipeLine实例

# 初始化自定义AI任务实例

my_ai = MyAIApp(kmodel_path, model_input_size=[320, 320],rgb888p_size=rgb888p_size, display_size=display_size, debug_mode=0)

my_ai.config_preprocess() # 配置预处理

try:

while True:

os.exitpoint() # 检查是否有退出信号

with ScopedTiming("total",1):

img = pl.get_frame() # 获取当前帧数据

res = my_ai.run(img) # 推理当前帧

my_ai.draw_result(pl, res) # 绘制结果

pl.show_image() # 显示结果

gc.collect() # 垃圾回收

except Exception as e:

sys.print_exception(e) # 打印异常信息

finally:

my_ai.deinit() # 反初始化

pl.destroy() # 销毁PipeLine实例

比如单目标跟踪(nanotracker.py)中的追踪模块,只需要对模版模型和实时推理模型的输出作为追踪模型的输入,不需要预处理:

class TrackerApp(AIBase):

def __init__(self,kmodel_path,crop_input_size,thresh,rgb888p_size=[1280,720],display_size=[1920,1080],debug_mode=0):

super().__init__(kmodel_path,rgb888p_size,debug_mode)

# kmodel路径

self.kmodel_path=kmodel_path

# crop模型的输入尺寸

self.crop_input_size=crop_input_size

# 跟踪框阈值

self.thresh=thresh

# 跟踪框宽、高调整系数

self.CONTEXT_AMOUNT = 0.5

# sensor给到AI的图像分辨率,宽16字节对齐

self.rgb888p_size=[ALIGN_UP(rgb888p_size[0],16),rgb888p_size[1]]

# 视频输出VO分辨率,宽16字节对齐

self.display_size=[ALIGN_UP(display_size[0],16),display_size[1]]

# debug模式

self.debug_mode=debug_mode

# 可以不定义

self.ai2d=Ai2d(debug_mode)

self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT,nn.ai2d_format.NCHW_FMT,np.uint8, np.uint8)

# 可以不定义

def config_preprocess(self,input_image_size=None):

with ScopedTiming("set preprocess config",self.debug_mode > 0):

pass

# 重写run函数,因为没有预处理过程,所以原来run操作中包含的preprocess->inference->postprocess不合适,这里只包含inference->postprocess

def run(self,input_np_1,input_np_2,center_xy_wh):

input_tensors=[]

input_tensors.append(nn.from_numpy(input_np_1))

input_tensors.append(nn.from_numpy(input_np_2))

results=self.inference(input_tensors)

return self.postprocess(results,center_xy_wh)

# 自定义后处理,results是模型输出array的列表,这里使用了aidemo的nanotracker_postprocess列表

def postprocess(self,results,center_xy_wh):

with ScopedTiming("postprocess",self.debug_mode > 0):

det = aidemo.nanotracker_postprocess(results[0],results[1],[self.rgb888p_size[1],self.rgb888p_size[0]],self.thresh,center_xy_wh,self.crop_input_size[0],self.CONTEXT_AMOUNT)

return det

1.3.5. 多模型任务

这里以双模型串联推理为例,给出的伪代码如下:

from libs.PipeLine import PipeLine, ScopedTiming

from libs.AIBase import AIBase

from libs.AI2D import Ai2d

import os

from media.media import *

import nncase_runtime as nn

import ulab.numpy as np

import image

import gc

import sys

# 自定义AI任务类,继承自AIBase基类

class MyAIApp_1(AIBase):

def __init__(self, kmodel_path, model_input_size, rgb888p_size=[224,224], display_size=[1920,1080], debug_mode=0):

# 调用基类的构造函数

super().__init__(kmodel_path, model_input_size, rgb888p_size, debug_mode)

# 模型文件路径

self.kmodel_path = kmodel_path

# 模型输入分辨率

self.model_input_size = model_input_size

# sensor给到AI的图像分辨率,并对宽度进行16的对齐

self.rgb888p_size = [ALIGN_UP(rgb888p_size[0], 16), rgb888p_size[1]]

# 显示分辨率,并对宽度进行16的�对齐

self.display_size = [ALIGN_UP(display_size[0], 16), display_size[1]]

# 是否开启调试模式

self.debug_mode = debug_mode

# 实例化Ai2d,用于实现模型预处理

self.ai2d = Ai2d(debug_mode)

# 设置Ai2d的输入输出格式和类型

self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)

# 配置预处理操作,这里使用了pad和resize,Ai2d支持crop/shift/pad/resize/affine,具体代码请打开/sdcard/app/libs/AI2D.py查看

def config_preprocess(self, input_image_size=None):

with ScopedTiming("set preprocess config", self.debug_mode > 0):

# 初始化ai2d预处理配置,默认为sensor给到AI的尺寸,可以通过设置input_image_size自行修改输入尺寸

ai2d_input_size = input_image_size if input_image_size else self.rgb888p_size

# 配置resize预处理方法

self.ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)

# 构建预处理流程

self.ai2d.build([1,3,ai2d_input_size[1],ai2d_input_size[0]],[1,3,self.model_input_size[1],self.model_input_size[0]])

# 自定义当前任务的后处理,results是模型输出array列表,需要根据实际任务重写

def postprocess(self, results):

with ScopedTiming("postprocess", self.debug_mode > 0):

pass

# 自定义AI任务类,继承自AIBase基类

class MyAIApp_2(AIBase):

def __init__(self, kmodel_path, model_input_size, rgb888p_size=[224,224], display_size=[1920,1080], debug_mode=0):

# 调用基类的构造函数

super().__init__(kmodel_path, model_input_size, rgb888p_size, debug_mode)

# 模型文件路径

self.kmodel_path = kmodel_path

# 模型输入分辨率

self.model_input_size = model_input_size

# sensor给到AI的图像分辨率,并对宽度进行16的对齐

self.rgb888p_size = [ALIGN_UP(rgb888p_size[0], 16), rgb888p_size[1]]

# 显示分辨率,并对宽度进行16的对齐

self.display_size = [ALIGN_UP(display_size[0], 16), display_size[1]]

# 是否开启调试模式

self.debug_mode = debug_mode

# 实例化Ai2d,用于实现模型预处理

self.ai2d = Ai2d(debug_mode)

# 设置Ai2d的输入输出格式和类型

self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)

# 配置预处理操作,这里使用了pad和resize,Ai2d支持crop/shift/pad/resize/affine,具体代码请打开/sdcard/app/libs/AI2D.py查看

def config_preprocess(self, input_image_size=None):

with ScopedTiming("set preprocess config", self.debug_mode > 0):

# 初始化ai2d预处理配置,默认为sensor给到AI的尺寸,可以通过设置input_image_size自行修改输入尺寸

ai2d_input_size = input_image_size if input_image_size else self.rgb888p_size

# 配置resize预处理方法

self.ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)

# 构建预处理流程

self.ai2d.build([1,3,ai2d_input_size[1],ai2d_input_size[0]],[1,3,self.model_input_size[1],self.model_input_size[0]])

# 自定义当前任务的后处理,results是模型输出array列表,需要根据实际任务重写

def postprocess(self, results):

with ScopedTiming("postprocess", self.debug_mode > 0):

pass

class MyApp:

def __init__(kmodel1_path,kmodel2_path,kmodel1_input_size,kmodel2_input_size,rgb888p_size,display_size,debug_mode):

# 创建两个模型推理的实例

self.app_1=MyApp_1(kmodel1_path,kmodel1_input_size,rgb888p_size,display_size,debug_mode)

self.app_2=MyApp_2(kmodel2_path,kmodel2_input_size,rgb888p_size,display_size,debug_mode)

self.app_1.config_preprocess()

# 编写run函数,具体代码根据AI任务的需求编写,此处只是给出一个示例

def run(self,input_np):

outputs_1=self.app_1.run(input_np)

outputs_2=[]

for out in outputs_1:

self.app_2.config_preprocess(out)

out_2=self.app_2.run(input_np)

outputs_2.append(out_2)

return outputs_1,outputs_2

# 绘制

def draw_result(self,pl,outputs_1,outputs_2):

pass

######其他函数########

...

####################

if __name__ == "__main__":

# 显示模式,默认"hdmi",可以选择"hdmi"和"lcd"

display_mode="hdmi"

if display_mode=="hdmi":

display_size=[1920,1080]

else:

display_size=[800,480]

rgb888p_size = [1920, 1080]

# 设置模型路径,这里要替换成当前任务模型

kmodel1_path = "test_kmodel1.kmodel"

kmdoel1_input_size=[320,320]

kmodel2_path = "test_kmodel2.kmodel"

kmodel2_input_size=[48,48]

###### 其它参数########

...

######################

# 初始化PipeLine,用于图像处理流程

pl = PipeLine(rgb888p_size=rgb888p_size, display_size=display_size, display_mode=display_mode)

pl.create() # 创建PipeLine实例

# 初始化自定义AI任务实例

my_ai = MyApp(kmodel1_path,kmodel2_path, kmodel1_input_size,kmodel2_input_size,rgb888p_size=rgb888p_size, display_size=display_size, debug_mode=0)

my_ai.config_preprocess() # 配置预处理

try:

while True:

os.exitpoint() # 检查是否有退出信号

with ScopedTiming("total",1):

img = pl.get_frame() # 获取当前帧数据

outputs_1,outputs_2 = my_ai.run(img) # 推理当前帧

my_ai.draw_result(pl, outputs_1,outputs_2) # 绘制结果

pl.show_image() # 显示结果

gc.collect() # 垃圾回收

except Exception as e:

sys.print_exception(e) # 打印异常信息

finally:

my_ai.app_1.deinit() # 反初始化

my_ai.app_2.deinit()

pl.destroy() # 销毁PipeLine实例

下面以车牌检测为例给出示例代码:

from libs.PipeLine import PipeLine, ScopedTiming

from libs.AIBase import AIBase

from libs.AI2D import Ai2d

import os

import ujson

from media.media import *

from time import *

import nncase_runtime as nn

import ulab.numpy as np

import time

import image

import aidemo

import random

import gc

import sys

# 自定义车牌检测类

class LicenceDetectionApp(AIBase):

# 初始化函数,设置车牌检测应用的参数

def __init__(self, kmodel_path, model_input_size, confidence_threshold=0.5, nms_threshold=0.2, rgb888p_size=[224,224], display_size=[1920,1080], debug_mode=0):

super().__init__(kmodel_path, model_input_size, rgb888p_size, debug_mode) # 调用基类的初始化函数

self.kmodel_path = kmodel_path # 模型路径

# 模型输入分辨率

self.model_input_size = model_input_size

# 分类阈值

self.confidence_threshold = confidence_threshold

self.nms_threshold = nms_threshold

# sensor给到AI的图像分辨率

self.rgb888p_size = [ALIGN_UP(rgb888p_size[0], 16), rgb888p_size[1]]

# 显示分辨率

self.display_size = [ALIGN_UP(display_size[0], 16), display_size[1]]

self.debug_mode = debug_mode

# Ai2d实例,用于实现模型预处理

self.ai2d = Ai2d(debug_mode)

# 设置Ai2d的输入输出格式和类型

self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT, nn.ai2d_format.NCHW_FMT, np.uint8, np.uint8)

# 配置预处理操作,这里使用了pad和resize,Ai2d支持crop/shift/pad/resize/affine

def config_preprocess(self, input_image_size=None):

with ScopedTiming("set preprocess config", self.debug_mode > 0):

# 初始化ai2d预处理配置,默认为sensor给到AI的尺寸,可以通过设置input_image_size自行修改输入尺寸

ai2d_input_size = input_image_size if input_image_size else self.rgb888p_size

self.ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)

self.ai2d.build([1,3,ai2d_input_size[1],ai2d_input_size[0]],[1,3,self.model_input_size[1],self.model_input_size[0]])

# 自定义当前任务的后处理

def postprocess(self, results):

with ScopedTiming("postprocess", self.debug_mode > 0):

# 对检测结果进行后处理

det_res = aidemo.licence_det_postprocess(results, [self.rgb888p_size[1], self.rgb888p_size[0]], self.model_input_size, self.confidence_threshold, self.nms_threshold)

return det_res

# 自定义��车牌识别任务类

class LicenceRecognitionApp(AIBase):

def __init__(self,kmodel_path,model_input_size,rgb888p_size=[1920,1080],display_size=[1920,1080],debug_mode=0):

super().__init__(kmodel_path,model_input_size,rgb888p_size,debug_mode)

# kmodel路径

self.kmodel_path=kmodel_path

# 检测模型输入分辨率

self.model_input_size=model_input_size

# sensor给到AI的图像分辨率,宽16字节对齐

self.rgb888p_size=[ALIGN_UP(rgb888p_size[0],16),rgb888p_size[1]]

# 视频输出VO分辨率,宽16字节对齐

self.display_size=[ALIGN_UP(display_size[0],16),display_size[1]]

# debug模式

self.debug_mode=debug_mode

# 车牌字符字典

self.dict_rec = ["挂", "使", "领", "澳", "港", "皖", "沪", "津", "渝", "冀", "晋", "蒙", "辽", "吉", "黑", "苏", "浙", "京", "闽", "赣", "鲁", "豫", "鄂", "湘", "粤", "桂", "琼", "川", "贵", "云", "藏", "陕", "甘", "青", "宁", "新", "警", "学", "0", "1", "2", "3", "4", "5", "6", "7", "8", "9", "A", "B", "C", "D", "E", "F", "G", "H", "J", "K", "L", "M", "N", "P", "Q", "R", "S", "T", "U", "V", "W", "X", "Y", "Z", "_", "-"]

self.dict_size = len(self.dict_rec)

self.ai2d=Ai2d(debug_mode)

self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT,nn.ai2d_format.NCHW_FMT,np.uint8, np.uint8)

# 配置预处理操作,这里使用了resize,Ai2d支持crop/shift/pad/resize/affine

def config_preprocess(self,input_image_size=None):

with ScopedTiming("set preprocess config",self.debug_mode > 0):

ai2d_input_size=input_image_size if input_image_size else self.rgb888p_size

self.ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)

self.ai2d.build([1,3,ai2d_input_size[1],ai2d_input_size[0]],[1,3,self.model_input_size[1],self.model_input_size[0]])

# 自定义后处理,results是模型输出的array列表

def postprocess(self,results):

with ScopedTiming("postprocess",self.debug_mode > 0):

output_data=results[0].reshape((-1,self.dict_size))

max_indices = np.argmax(output_data, axis=1)

result_str = ""

for i in range(max_indices.shape[0]):

index = max_indices[i]

if index > 0 and (i == 0 or index != max_indices[i - 1]):

result_str += self.dict_rec[index - 1]

return result_str

# 车牌识别任务类

class LicenceRec:

def __init__(self,licence_det_kmodel,licence_rec_kmodel,det_input_size,rec_input_size,confidence_threshold=0.25,nms_threshold=0.3,rgb888p_size=[1920,1080],display_size=[1920,1080],debug_mode=0):

# 车牌检测模型路径

self.licence_det_kmodel=licence_det_kmodel

# 车牌识别模型路径

self.licence_rec_kmodel=licence_rec_kmodel

# 人脸检测模型输入分辨率

self.det_input_size=det_input_size

# 人脸姿态模型输入分辨率

self.rec_input_size=rec_input_size

# 置信度阈值

self.confidence_threshold=confidence_threshold

# nms阈值

self.nms_threshold=nms_threshold

# sensor给到AI的图像分辨率,宽16字节对齐

self.rgb888p_size=[ALIGN_UP(rgb888p_size[0],16),rgb888p_size[1]]

# 视频输出VO分辨率,宽16字节对齐

self.display_size=[ALIGN_UP(display_size[0],16),display_size[1]]

# debug_mode模式

self.debug_mode=debug_mode

self.licence_det=LicenceDetectionApp(self.licence_det_kmodel,model_input_size=self.det_input_size,confidence_threshold=self.confidence_threshold,nms_threshold=self.nms_threshold,rgb888p_size=self.rgb888p_size,display_size=self.display_size,debug_mode=0)

self.licence_rec=LicenceRecognitionApp(self.licence_rec_kmodel,model_input_size=self.rec_input_size,rgb888p_size=self.rgb888p_size)

self.licence_det.config_preprocess()

# run函数

def run(self,input_np):

# 执行车牌检测

det_boxes=self.licence_det.run(input_np)

# 将车牌部分抠出来

imgs_array_boxes = aidemo.ocr_rec_preprocess(input_np,[self.rgb888p_size[1],self.rgb888p_size[0]],det_boxes)

imgs_array = imgs_array_boxes[0]

boxes = imgs_array_boxes[1]

rec_res = []

for img_array in imgs_array:

# 对每一个检测到的车牌进行识别

self.licence_rec.config_preprocess(input_image_size=[img_array.shape[3],img_array.shape[2]])

licence_str=self.licence_rec.run(img_array)

rec_res.append(licence_str)

gc.collect()

return det_boxes,rec_res

# 绘制车牌检测识别效果

def draw_result(self,pl,det_res,rec_res):

pl.osd_img.clear()

if det_res:

point_8 = np.zeros((8),dtype=np.int16)

for det_index in range(len(det_res)):

for i in range(4):

x = det_res[det_index][i * 2 + 0]/self.rgb888p_size[0]*self.display_size[0]

y = det_res[det_index][i * 2 + 1]/self.rgb888p_size[1]*self.display_size[1]

point_8[i * 2 + 0] = int(x)

point_8[i * 2 + 1] = int(y)

for i in range(4):

pl.osd_img.draw_line(point_8[i * 2 + 0],point_8[i * 2 + 1],point_8[(i+1) % 4 * 2 + 0],point_8[(i+1) % 4 * 2 + 1],color=(255, 0, 255, 0),thickness=4)

pl.osd_img.draw_string_advanced( point_8[6], point_8[7] + 20, 40,rec_res[det_index] , color=(255,255,153,18))

if __name__=="__main__":

# 显示模式,默认"hdmi",可以选择"hdmi"和"lcd"

display_mode="hdmi"

if display_mode=="hdmi":

display_size=[1920,1080]

else:

display_size=[800,480]

# 车牌检测模型路径

licence_det_kmodel_path="/sdcard/app/tests/kmodel/LPD_640.kmodel"

# 车牌识别模型路径

licence_rec_kmodel_path="/sdcard/app/tests/kmodel/licence_reco.kmodel"

# 其它参数

rgb888p_size=[640,360]

licence_det_input_size=[640,640]

licence_rec_input_size=[220,32]

confidence_threshold=0.2

nms_threshold=0.2

# 初始化PipeLine,只关注传给AI的图像分辨率,显示的分辨率

pl=PipeLine(rgb888p_size=rgb888p_size,display_size=display_size,display_mode=display_mode)

pl.create()

lr=LicenceRec(licence_det_kmodel_path,licence_rec_kmodel_path,det_input_size=licence_det_input_size,rec_input_size=licence_rec_input_size,confidence_threshold=confidence_threshold,nms_threshold=nms_threshold,rgb888p_size=rgb888p_size,display_size=display_size)

try:

while True:

os.exitpoint()

with ScopedTiming("total",1):

img=pl.get_frame() # 获取当前帧

det_res,rec_res=lr.run(img) # 推理当前帧

lr.draw_result(pl,det_res,rec_res) # 绘制当前帧推理结果

pl.show_image() # 展示推理结果

gc.collect()

except Exception as e:

sys.print_exception(e)

finally:

lr.licence_det.deinit()

lr.licence_rec.deinit()

pl.destroy()

1.4. 参考文档

1.4.1. k230 canmv文档

文档链接:Welcome to K230 CanMV’s documentation! — K230 CanMV 文档 (canaan-creative.com)

1.4.2. Ulab库支持

链接: ulab – Manipulate numeric data similar to numpy — Adafruit CircuitPython 9.1.0-beta.3 documentation

2. AI Demo

AI Demo分为两种类型:单模型、多模型,涵盖物体、人脸、人手、人体、车牌、OCR、音频(KWS、TTS)等方向;参考该文档,k230用户可以更快上手K230 AI应用的开发,实现预期效果。

| Demo名称 | 场景 | 任务类型 |

|---|---|---|

| dynamic_gesture | 动态手势识别 | 多模型任务 |

| eye_gaze | 注视估计 | 多模型任务 |

| face_detection | 人脸检测 | 单模型任务 |

| face_landmark | 人脸关键部位 | 多模型任务 |

| face_mesh | 人脸3D网格 | 多模型任务 |

| face_parse | 人脸解析 | 多模型任务 |

| face_pose | 人脸姿态 | 多模型任务 |

| face_recognition | 人脸识别 | 多模型任务 |

| face_registration | 人脸注册 | 多模型任务 |

| falldown_detection | 跌倒检测 | 单模型任务 |

| finger_guessing | 猜拳游戏 | 多模型任务 |

| hand_detection | 手掌检测 | 单模型任务 |

| hand_keypoint_class | 手掌关键点分类 | 多模型任务 |

| hand_keypoint_detection | 手掌关键点检测 | 多模型任务 |

| hand_recognition | 手势识别 | 多模型任务 |

| keyword_spotting | 关键词唤醒 | 单模型任务 |

| licence_det | 车牌检测 | 单模型任务 |

| licence_det_rec | 车牌识别 | 多模型任务 |

| nanotracker | 单目标跟踪 | 多模型任务 |

| object_detect_yolov8n | yolov8n目标检测 | 单模型任务 |

| ocr_det | OCR检测 | 单模型任务 |

| ocr_rec | OCR识别 | 多模型任务 |

| person_detection | 人体检测 | 单模型任务 |

| person_kp_detect | 人体关键点检测 | 多模型任务 |

| puzzle_game | 拼图游戏 | 多模型任务 |

| segment_yolov8n | yolov8分割 | 单模型任务 |

| self_learning | 自学习 | 单模型任务 |

| space_resize | 局部放大器 | 多模型任务 |

| tts_zh | 中文文本转语音 | 多模型任务 |

2.1. 动态手势识别

from libs.PipeLine import PipeLine, ScopedTiming

from libs.AIBase import AIBase

from libs.AI2D import Ai2d

from random import randint

import os

import ujson

from media.media import *

from time import *

import nncase_runtime as nn

import ulab.numpy as np

import time

import image

import aicube

import random

import gc

import sys

# 自定义手掌检测任务类

class HandDetApp(AIBase):

def __init__(self,kmodel_path,labels,model_input_size,anchors,confidence_threshold=0.2,nms_threshold=0.5,nms_option=False, strides=[8,16,32],rgb888p_size=[1920,1080],display_size=[1920,1080],debug_mode=0):

super().__init__(kmodel_path,model_input_size,rgb888p_size,debug_mode)

# kmodel路径

self.kmodel_path=kmodel_path

# 检测标签

self.labels=labels

# 检测模型输入分辨率

self.model_input_size=model_input_size

# 置信度阈值

self.confidence_threshold=confidence_threshold

# nms阈值

self.nms_threshold=nms_threshold

# 检测锚框

self.anchors=anchors

self.strides = strides # 特征下采样倍数

self.nms_option = nms_option # NMS选项,如果为True做类间NMS,如果为False做类内NMS

# sensor给到AI的图像分辨率,宽16字节对齐

self.rgb888p_size=[ALIGN_UP(rgb888p_size[0],16),rgb888p_size[1]]

# 视频输出VO分辨率,宽16字节对齐

self.display_size=[ALIGN_UP(display_size[0],16),display_size[1]]

# debug模式

self.debug_mode=debug_mode

# Ai2d实例,用于实现模型预处理

self.ai2d=Ai2d(debug_mode)

# 设置Ai2d的输入输出格式和类型

self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT,nn.ai2d_format.NCHW_FMT,np.uint8, np.uint8)

# 配置预处理操作,这里使用了padding和resize,Ai2d支持crop/shift/pad/resize/affine,具体代码请打开/sdcard/app/libs/AI2D.py查看

def config_preprocess(self,input_image_size=None):

with ScopedTiming("set preprocess config",self.debug_mode > 0):

# 初始化ai2d预处理配置,默认为sensor给到AI的尺寸,可以通过设置input_image_size自行修改输入尺寸

ai2d_input_size = input_image_size if input_image_size else self.rgb888p_size

# 计算padding参数并应用pad操作,以确保输入图像尺寸与模型输入尺寸匹配

top, bottom, left, right = self.get_padding_param()

self.ai2d.pad([0, 0, 0, 0, top, bottom, left, right], 0, [114, 114, 114])

# 使用双线性插值进行resize操作,调整图像尺寸以符合模型输入要求

self.ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)

# 构建预处理流程,参数为预处理输入tensor的shape和预处理输出的tensor的shape

self.ai2d.build([1,3,ai2d_input_size[1],ai2d_input_size[0]],[1,3,self.model_input_size[1],self.model_input_size[0]])

# 自定义后处理过程,这里使用了aicube的anchorbasedet_post_process接口

def postprocess(self,results):

with ScopedTiming("postprocess",self.debug_mode > 0):

dets = aicube.anchorbasedet_post_process(results[0], results[1], results[2], self.model_input_size, self.rgb888p_size, self.strides, len(self.labels), self.confidence_threshold, self.nms_threshold, self.anchors, self.nms_option)

# 返回手掌检测结果

return dets

# 计算padding参数,确保输入图像尺寸与模型输入尺寸匹配

def get_padding_param(self):

# 根据目标宽度和高度计算比例因子

dst_w = self.model_input_size[0]

dst_h = self.model_input_size[1]

input_width = self.rgb888p_size[0]

input_high = self.rgb888p_size[1]

ratio_w = dst_w / input_width

ratio_h = dst_h / input_high

# 选择较小的比例因子,以确保图像内容完整

if ratio_w < ratio_h:

ratio = ratio_w

else:

ratio = ratio_h

# 计算新的宽度和高度

new_w = int(ratio * input_width)

new_h = int(ratio * input_high)

# 计算宽度和高度的差值,并确定padding的位置

dw = (dst_w - new_w) / 2

dh = (dst_h - new_h) / 2

top = int(round(dh - 0.1))

bottom = int(round(dh + 0.1))

left = int(round(dw - 0.1))

right = int(round(dw + 0.1))

return top, bottom, left, right

# 自定义手势关键点分类任务类

class HandKPClassApp(AIBase):

def __init__(self,kmodel_path,model_input_size,rgb888p_size=[1920,1080],display_size=[1920,1080],debug_mode=0):

super().__init__(kmodel_path,model_input_size,rgb888p_size,debug_mode)

# kmodel路径

self.kmodel_path=kmodel_path

# 手掌关键点模型输入分辨率

self.model_input_size=model_input_size

# sensor给到AI的图像分辨率,宽16字节对齐

self.rgb888p_size=[ALIGN_UP(rgb888p_size[0],16),rgb888p_size[1]]

# 视频输出VO分辨率,宽16字节对齐

self.display_size=[ALIGN_UP(display_size[0],16),display_size[1]]

# crop参数列表

self.crop_params=[]

# debug模式

self.debug_mode=debug_mode

# Ai2d实例,用于实现模型预处理

self.ai2d=Ai2d(debug_mode)

# 设置Ai2d的输入输出格式和类型

self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT,nn.ai2d_format.NCHW_FMT,np.uint8, np.uint8)

# 配置预处理操作,这里使用了crop和resize,Ai2d支持crop/shift/pad/resize/affine,具体代码请打开/sdcard/app/libs/AI2D.py查看

def config_preprocess(self,det,input_image_size=None):

with ScopedTiming("set preprocess config",self.debug_mode > 0):

# 如果input_image_size为None,使用视频出图大小,否则按照自定义设置

ai2d_input_size=input_image_size if input_image_size else self.rgb888p_size

# 计算crop参数

self.crop_params = self.get_crop_param(det)

# 设置crop预处理过程

self.ai2d.crop(self.crop_params[0],self.crop_params[1],self.crop_params[2],self.crop_params[3])

# 设置resize预处理过程

self.ai2d.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)

# build预处理过程,参数为输入tensor的shape和输出tensor的shape

self.ai2d.build([1,3,ai2d_input_size[1],ai2d_input_size[0]],[1,3,self.model_input_size[1],self.model_input_size[0]])

# 自定义后处理,results是模型输出的array列表

def postprocess(self,results):

with ScopedTiming("postprocess",self.debug_mode > 0):

results=results[0].reshape(results[0].shape[0]*results[0].shape[1])

results_show = np.zeros(results.shape,dtype=np.int16)

results_show[0::2] = results[0::2] * self.crop_params[3] + self.crop_params[0]

results_show[1::2] = results[1::2] * self.crop_params[2] + self.crop_params[1]

# 根据输出计算手势

gesture=self.hk_gesture(results_show)

return results_show,gesture

# 计算crop参数

def get_crop_param(self,det_box):

x1, y1, x2, y2 = det_box[2],det_box[3],det_box[4],det_box[5]

w,h= int(x2 - x1),int(y2 - y1)

w_det = int(float(x2 - x1) * self.display_size[0] // self.rgb888p_size[0])

h_det = int(float(y2 - y1) * self.display_size[1] // self.rgb888p_size[1])

x_det = int(x1*self.display_size[0] // self.rgb888p_size[0])

y_det = int(y1*self.display_size[1] // self.rgb888p_size[1])

length = max(w, h)/2

cx = (x1+x2)/2

cy = (y1+y2)/2

ratio_num = 1.26*length

x1_kp = int(max(0,cx-ratio_num))

y1_kp = int(max(0,cy-ratio_num))

x2_kp = int(min(self.rgb888p_size[0]-1, cx+ratio_num))

y2_kp = int(min(self.rgb888p_size[1]-1, cy+ratio_num))

w_kp = int(x2_kp - x1_kp + 1)

h_kp = int(y2_kp - y1_kp + 1)

return [x1_kp, y1_kp, w_kp, h_kp]

# 求两个vector之间的夹角

def hk_vector_2d_angle(self,v1,v2):

with ScopedTiming("hk_vector_2d_angle",self.debug_mode > 0):

v1_x,v1_y,v2_x,v2_y = v1[0],v1[1],v2[0],v2[1]

v1_norm = np.sqrt(v1_x * v1_x+ v1_y * v1_y)

v2_norm = np.sqrt(v2_x * v2_x + v2_y * v2_y)

dot_product = v1_x * v2_x + v1_y * v2_y

cos_angle = dot_product/(v1_norm*v2_norm)

angle = np.acos(cos_angle)*180/np.pi

return angle

# 根据手掌关键点检测结果判断手势类别

def hk_gesture(self,results):

with ScopedTiming("hk_gesture",self.debug_mode > 0):

angle_list = []

for i in range(5):

angle = self.hk_vector_2d_angle([(results[0]-results[i*8+4]), (results[1]-results[i*8+5])],[(results[i*8+6]-results[i*8+8]),(results[i*8+7]-results[i*8+9])])

angle_list.append(angle)

thr_angle,thr_angle_thumb,thr_angle_s,gesture_str = 65.,53.,49.,None

if 65535. not in angle_list:

if (angle_list[0]>thr_angle_thumb) and (angle_list[1]>thr_angle) and (angle_list[2]>thr_angle) and (angle_list[3]>thr_angle) and (angle_list[4]>thr_angle):

gesture_str = "fist"

elif (angle_list[0]<thr_angle_s) and (angle_list[1]<thr_angle_s) and (angle_list[2]<thr_angle_s) and (angle_list[3]<thr_angle_s) and (angle_list[4]<thr_angle_s):

gesture_str = "five"

elif (angle_list[0]<thr_angle_s) and (angle_list[1]<thr_angle_s) and (angle_list[2]>thr_angle) and (angle_list[3]>thr_angle) and (angle_list[4]>thr_angle):

gesture_str = "gun"

elif (angle_list[0]<thr_angle_s) and (angle_list[1]<thr_angle_s) and (angle_list[2]>thr_angle) and (angle_list[3]>thr_angle) and (angle_list[4]<thr_angle_s):

gesture_str = "love"

elif (angle_list[0]>5) and (angle_list[1]<thr_angle_s) and (angle_list[2]>thr_angle) and (angle_list[3]>thr_angle) and (angle_list[4]>thr_angle):

gesture_str = "one"

elif (angle_list[0]<thr_angle_s) and (angle_list[1]>thr_angle) and (angle_list[2]>thr_angle) and (angle_list[3]>thr_angle) and (angle_list[4]<thr_angle_s):

gesture_str = "six"

elif (angle_list[0]>thr_angle_thumb) and (angle_list[1]<thr_angle_s) and (angle_list[2]<thr_angle_s) and (angle_list[3]<thr_angle_s) and (angle_list[4]>thr_angle):

gesture_str = "three"

elif (angle_list[0]<thr_angle_s) and (angle_list[1]>thr_angle) and (angle_list[2]>thr_angle) and (angle_list[3]>thr_angle) and (angle_list[4]>thr_angle):

gesture_str = "thumbUp"

elif (angle_list[0]>thr_angle_thumb) and (angle_list[1]<thr_angle_s) and (angle_list[2]<thr_angle_s) and (angle_list[3]>thr_angle) and (angle_list[4]>thr_angle):

gesture_str = "yeah"

return gesture_str

# 自定义动态手势识别任务类

class DynamicGestureApp(AIBase):

def __init__(self,kmodel_path,model_input_size,rgb888p_size=[1920,1080],display_size=[1920,1080],debug_mode=0):

super().__init__(kmodel_path,model_input_size,rgb888p_size,debug_mode)

# kmodel路径

self.kmodel_path=kmodel_path

# 检测模型输入分辨率

self.model_input_size=model_input_size

# sensor给到AI的图像分辨率,宽16字节对齐

self.rgb888p_size=[ALIGN_UP(rgb888p_size[0],16),rgb888p_size[1]]

# 视频输出VO分辨率,宽16字节对齐

self.display_size=[ALIGN_UP(display_size[0],16),display_size[1]]

# debug模式

self.debug_mode=debug_mode

# 注意:ai2d设置多个预处理时执行的顺序为:crop->shift->resize/affine->pad,如果不符合该顺序,需要配置多个ai2d对象;

# 如下模型预处理要先做resize再做crop,因此要配置两个Ai2d对象

self.ai2d_resize=Ai2d(debug_mode)

self.ai2d_resize.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT,nn.ai2d_format.NCHW_FMT,np.uint8, np.uint8)

self.ai2d_crop=Ai2d(debug_mode)

self.ai2d_crop.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT,nn.ai2d_format.NCHW_FMT,np.uint8, np.uint8)

# 动态手势识别模型输入tensors列表

self.input_tensors=[]

# 动态手势识别模型的输入tensor的shape

self.gesture_kmodel_input_shape = [[1, 3, 224, 224], # 动态手势识别kmodel输入分辨率

[1,3,56,56],

[1,4,28,28],

[1,4,28,28],

[1,8,14,14],

[1,8,14,14],

[1,8,14,14],

[1,12,14,14],

[1,12,14,14],

[1,20,7,7],

[1,20,7,7]]

# 预处理参数

self.resize_shape = 256

self.mean_values = np.array([0.485, 0.456, 0.406]).reshape((3,1,1)) # 动态手势识别预处理均值

self.std_values = np.array([0.229, 0.224, 0.225]).reshape((3,1,1)) # 动态手势识别预处理方差

self.first_data=None

self.max_hist_len=20

self.crop_params=self.get_crop_param()

# 配置预处理

def config_preprocess(self,input_image_size=None):

with ScopedTiming("set preprocess config",self.debug_mode > 0):

# 初始化ai2d预处理配置

ai2d_input_size=input_image_size if input_image_size else self.rgb888p_size

# 配置resize和crop预处理

self.ai2d_resize.resize(nn.interp_method.tf_bilinear, nn.interp_mode.half_pixel)

self.ai2d_resize.build([1,3,ai2d_input_size[1],ai2d_input_size[0]],[1,3,self.crop_params[1],self.crop_params[0]])

self.ai2d_crop.crop(self.crop_params[2],self.crop_params[3],self.crop_params[4],self.crop_params[5])

self.ai2d_crop.build([1,3,self.crop_params[1],self.crop_params[0]],[1,3,self.model_input_size[1],self.model_input_size[0]])

# 初始化动态手势识别模型输入列表

inputs_num=self.get_kmodel_inputs_num()

self.first_data = np.ones(self.gesture_kmodel_input_shape[0], dtype=np.float)

for i in range(inputs_num):

data = np.zeros(self.gesture_kmodel_input_shape[i], dtype=np.float)

self.input_tensors.append(nn.from_numpy(data))

# 重写预处理,因为该部分不是单纯的走一个ai2d做预处理,所以该函数需要重写

def preprocess(self,input_np):

# 先走resize,再走crop

resize_tensor=self.ai2d_resize.run(input_np)

crop_output_tensor=self.ai2d_crop.run(resize_tensor.to_numpy())

ai2d_output = crop_output_tensor.to_numpy()

self.first_data[0] = ai2d_output[0].copy()

self.first_data[0] = (self.first_data[0]*1.0/255 -self.mean_values)/self.std_values

self.input_tensors[0]=nn.from_numpy(self.first_data)

return

# run函数重写

def run(self,input_np,his_logit,history):

# 预处理

self.preprocess(input_np)

# 推理

outputs=self.inference(self.input_tensors)

# 使用当前帧的输出更新下一帧的输入列表

outputs_num=self.get_kmodel_outputs_num()

for i in range(1,outputs_num):

self.input_tensors[i]=nn.from_numpy(outputs[i])

# 返回后处理结果

return self.postprocess(outputs,his_logit,history)

# 自定义后处理

def postprocess(self,results,his_logit, history):

with ScopedTiming("postprocess",self.debug_mode > 0):

his_logit.append(results[0])

avg_logit = sum(np.array(his_logit))

idx_ = np.argmax(avg_logit)

idx = self.gesture_process_output(idx_, history)

if (idx_ != idx):

his_logit_last = his_logit[-1]

his_logit = []

his_logit.append(his_logit_last)

return idx, avg_logit

# 手势处理函数

def gesture_process_output(self,pred,history):

if (pred == 7 or pred == 8 or pred == 21 or pred == 22 or pred == 3 ):

pred = history[-1]

if (pred == 0 or pred == 4 or pred == 6 or pred == 9 or pred == 14 or pred == 1 or pred == 19 or pred == 20 or pred == 23 or pred == 24) :

pred = history[-1]

if (pred == 0) :

pred = 2

if (pred != history[-1]) :

if (len(history)>= 2) :

if (history[-1] != history[len(history)-2]) :

pred = history[-1]

history.append(pred)

if (len(history) > self.max_hist_len) :

history = history[-self.max_hist_len:]

return history[-1]

# 计算crop参数

def get_crop_param(self):

ori_w = self.rgb888p_size[0]

ori_h = self.rgb888p_size[1]

width = self.model_input_size[0]

height = self.model_input_size[1]

ratiow = float(self.resize_shape) / ori_w

ratioh = float(self.resize_shape) / ori_h

if ratiow < ratioh:

ratio = ratioh

else:

ratio = ratiow

new_w = int(ratio * ori_w)

new_h = int(ratio * ori_h)

top = int((new_h-height)/2)

left = int((new_w-width)/2)

return new_w,new_h,left,top,width,height

# 重写逆初始化

def deinit(self):

with ScopedTiming("deinit",self.debug_mode > 0):

del self.kpu

del self.ai2d_resize

del self.ai2d_crop

self.tensors.clear()

del self.tensors

gc.collect()

nn.shrink_memory_pool()

os.exitpoint(os.EXITPOINT_ENABLE_SLEEP)

time.sleep_ms(100)

# 自定义动态手势识别任务

class DynamicGesture:

def __init__(self,hand_det_kmodel,hand_kp_kmodel,gesture_kmodel,det_input_size,kp_input_size,gesture_input_size,labels,anchors,confidence_threshold=0.25,nms_threshold=0.3,nms_option=False,strides=[8,16,32],rgb888p_size=[1280,720],display_size=[1920,1080],debug_mode=0):

# 手掌检测模型路径

self.hand_det_kmodel=hand_det_kmodel

# 手掌关键点模型路径

self.hand_kp_kmodel=hand_kp_kmodel

# 动态手势识别路径

self.gesture_kmodel=gesture_kmodel

# 手掌检测模型输入分辨率

self.det_input_size=det_input_size

# 手掌关键点模型输入分辨率

self.kp_input_size=kp_input_size

# 动态手势识别模型输入分辨率

self.gesture_input_size=gesture_input_size

self.labels=labels

# anchors

self.anchors=anchors

# 置信度阈值

self.confidence_threshold=confidence_threshold

# nms阈值

self.nms_threshold=nms_threshold

self.nms_option=nms_option

self.strides=strides

# sensor给到AI的图像分辨率,宽16字节对齐

self.rgb888p_size=[ALIGN_UP(rgb888p_size[0],16),rgb888p_size[1]]

# 视频输出VO分辨率,宽16字节对齐

self.display_size=[ALIGN_UP(display_size[0],16),display_size[1]]

# 动态手势识别贴图

self.bin_width = 150 # 动态手势识别屏幕坐上角标志状态文件的短边尺寸

self.bin_height = 216 # 动态手势识别屏幕坐上角标志状态文件的长边尺寸

shang_argb = np.fromfile("/sdcard/app/tests/utils/shang.bin", dtype=np.uint8)

self.shang_argb = shang_argb.reshape((self.bin_height, self.bin_width, 4))

xia_argb = np.fromfile("/sdcard/app/tests/utils/xia.bin", dtype=np.uint8)

self.xia_argb = xia_argb.reshape((self.bin_height, self.bin_width, 4))

zuo_argb = np.fromfile("/sdcard/app/tests/utils/zuo.bin", dtype=np.uint8)

self.zuo_argb = zuo_argb.reshape((self.bin_width, self.bin_height, 4))

you_argb = np.fromfile("/sdcard/app/tests/utils/you.bin", dtype=np.uint8)

self.you_argb = you_argb.reshape((self.bin_width, self.bin_height, 4))

#其他参数

self.TRIGGER = 0 # 动态手势识别应用的结果状态

self.MIDDLE = 1

self.UP = 2

self.DOWN = 3

self.LEFT = 4

self.RIGHT = 5

self.max_hist_len = 20 # 最多存储多少帧的结果

# debug_mode模式

self.debug_mode=debug_mode

self.cur_state = self.TRIGGER

self.pre_state = self.TRIGGER

self.draw_state = self.TRIGGER

self.vec_flag = []

self.his_logit = []

self.history = [2]

self.s_start = time.time_ns()

self.m_start=None

self.hand_det=HandDetApp(self.hand_det_kmodel,self.labels,model_input_size=self.det_input_size,anchors=self.anchors,confidence_threshold=self.confidence_threshold,nms_threshold=self.nms_threshold,nms_option=self.nms_option,strides=self.strides,rgb888p_size=self.rgb888p_size,display_size=self.display_size,debug_mode=0)

self.hand_kp=HandKPClassApp(self.hand_kp_kmodel,model_input_size=self.kp_input_size,rgb888p_size=self.rgb888p_size,display_size=self.display_size)

self.dg=DynamicGestureApp(self.gesture_kmodel,model_input_size=self.gesture_input_size,rgb888p_size=self.rgb888p_size,display_size=self.display_size)

self.hand_det.config_preprocess()

self.dg.config_preprocess()

# run函数

def run(self,input_np):

if self.cur_state == self.TRIGGER:

# 手掌检测

det_boxes=self.hand_det.run(input_np)

boxes=[]

gesture_res=[]

for det_box in det_boxes:

# 筛选检测框

x1, y1, x2, y2 = det_box[2],det_box[3],det_box[4],det_box[5]

w,h= int(x2 - x1),int(y2 - y1)

if (h<(0.1*self.rgb888p_size[1])):

continue

if (w<(0.25*self.rgb888p_size[0]) and ((x1<(0.03*self.rgb888p_size[0])) or (x2>(0.97*self.rgb888p_size[0])))):

continue

if (w<(0.15*self.rgb888p_size[0]) and ((x1<(0.01*self.rgb888p_size[0])) or (x2>(0.99*self.rgb888p_size[0])))):

continue

# 手掌关键点预处理配置

self.hand_kp.config_preprocess(det_box)

# 手掌关键点检测

hk_results,gesture_str=self.hand_kp.run(input_np)

boxes.append(det_box)

gesture_res.append((hk_results,gesture_str))

return boxes,gesture_res

else:

# 动态手势识别

idx, avg_logit = self.dg.run(input_np, self.his_logit, self.history)

return idx,avg_logit

# 根据输出结果绘制效果

def draw_result(self,pl,output1,output2):

pl.osd_img.clear()

draw_img_np = np.zeros((self.display_size[1],self.display_size[0],4),dtype=np.uint8)

draw_img=image.Image(self.display_size[0], self.display_size[1], image.ARGB8888,alloc=image.ALLOC_REF,data=draw_img_np)

if self.cur_state == self.TRIGGER:

for i in range(len(output1)):

hk_results,gesture=output2[i][0],output2[i][1]

if ((gesture == "five") or (gesture == "yeah")):

v_x = hk_results[24]-hk_results[0]

v_y = hk_results[25]-hk_results[1]

angle = self.hand_kp.hk_vector_2d_angle([v_x,v_y],[1.0,0.0])

if (v_y>0):

angle = 360-angle

if ((70.0<=angle) and (angle<110.0)):

if ((self.pre_state != self.UP) or (self.pre_state != self.MIDDLE)):

self.vec_flag.append(self.pre_state)

if ((len(self.vec_flag)>10)or(self.pre_state == self.UP) or (self.pre_state == self.MIDDLE) or(self.pre_state == self.TRIGGER)):

draw_img_np[:self.bin_height,:self.bin_width,:] = self.shang_argb

self.cur_state = self.UP

elif ((110.0<=angle) and (angle<225.0)): # 手指向右(实际方向)

if (self.pre_state != self.RIGHT):

self.vec_flag.append(self.pre_state)

if ((len(self.vec_flag)>10)or(self.pre_state == self.RIGHT)or(self.pre_state == self.TRIGGER)):

draw_img_np[:self.bin_width,:self.bin_height,:] = self.you_argb

self.cur_state = self.RIGHT

elif((225.0<=angle) and (angle<315.0)): # 手指向下

if (self.pre_state != self.DOWN):

self.vec_flag.append(self.pre_state)

if ((len(self.vec_flag)>10)or(self.pre_state == self.DOWN)or(self.pre_state == self.TRIGGER)):

draw_img_np[:self.bin_height,:self.bin_width,:] = self.xia_argb

self.cur_state = self.DOWN

else: # 手指向左(实际方向)

if (self.pre_state != self.LEFT):

self.vec_flag.append(self.pre_state)

if ((len(self.vec_flag)>10)or(self.pre_state == self.LEFT)or(self.pre_state == self.TRIGGER)):

draw_img_np[:self.bin_width,:self.bin_height,:] = self.zuo_argb

self.cur_state = self.LEFT

self.m_start = time.time_ns()

self.his_logit = []

else:

idx,avg_logit=output1,output2[0]

if (self.cur_state == self.UP):

draw_img_np[:self.bin_height,:self.bin_width,:] = self.shang_argb

if ((idx==15) or (idx==10)):

self.vec_flag.clear()

if (((avg_logit[idx] >= 0.7) and (len(self.his_logit) >= 2)) or ((avg_logit[idx] >= 0.3) and (len(self.his_logit) >= 4))):

self.s_start = time.time_ns()

self.cur_state = self.TRIGGER

self.draw_state = self.DOWN

self.history = [2]

self.pre_state = self.UP

elif ((idx==25)or(idx==26)) :

self.vec_flag.clear()

if (((avg_logit[idx] >= 0.4) and (len(self.his_logit) >= 2)) or ((avg_logit[idx] >= 0.3) and (len(self.his_logit) >= 3))):

self.s_start = time.time_ns()

self.cur_state = self.TRIGGER

self.draw_state = self.MIDDLE

self.history = [2]

self.pre_state = self.MIDDLE

else:

self.his_logit.clear()

elif (self.cur_state == self.RIGHT):

draw_img_np[:self.bin_width,:self.bin_height,:] = self.you_argb

if ((idx==16)or(idx==11)) :

self.vec_flag.clear()

if (((avg_logit[idx] >= 0.4) and (len(self.his_logit) >= 2)) or ((avg_logit[idx] >= 0.3) and (len(self.his_logit) >= 3))):

self.s_start = time.time_ns()

self.cur_state = self.TRIGGER

self.draw_state = self.RIGHT

self.history = [2]

self.pre_state = self.RIGHT

else:

self.his_logit.clear()

elif (self.cur_state == self.DOWN):

draw_img_np[:self.bin_height,:self.bin_width,:] = self.xia_argb

if ((idx==18)or(idx==13)):

self.vec_flag.clear()

if (((avg_logit[idx] >= 0.4) and (len(self.his_logit) >= 2)) or ((avg_logit[idx] >= 0.3) and (len(self.his_logit) >= 3))):

self.s_start = time.time_ns()

self.cur_state = self.TRIGGER

self.draw_state = self.UP

self.history = [2]

self.pre_state = self.DOWN

else:

self.his_logit.clear()

elif (self.cur_state == self.LEFT):

draw_img_np[:self.bin_width,:self.bin_height,:] = self.zuo_argb

if ((idx==17)or(idx==12)):

self.vec_flag.clear()

if (((avg_logit[idx] >= 0.4) and (len(self.his_logit) >= 2)) or ((avg_logit[idx] >= 0.3) and (len(self.his_logit) >= 3))):

self.s_start = time.time_ns()

self.cur_state = self.TRIGGER

self.draw_state = self.LEFT

self.history = [2]

self.pre_state = self.LEFT

else:

self.his_logit.clear()

self.elapsed_time = round((time.time_ns() - self.m_start)/1000000)

if ((self.cur_state != self.TRIGGER) and (self.elapsed_time>2000)):

self.cur_state = self.TRIGGER

self.pre_state = self.TRIGGER

self.elapsed_ms_show = round((time.time_ns()-self.s_start)/1000000)

if (self.elapsed_ms_show<1000):

if (self.draw_state == self.UP):

draw_img.draw_arrow(1068,330,1068,130, (255,170,190,230), thickness=13) # 判断为向上挥动时,画一个向上的箭头

draw_img.draw_string_advanced(self.display_size[0]//2-50,self.display_size[1]//2-50,32,"向上")

elif (self.draw_state == self.RIGHT):

draw_img.draw_arrow(1290,540,1536,540, (255,170,190,230), thickness=13) # 判断为向右挥动时,画一个向右的箭头

draw_img.draw_string_advanced(self.display_size[0]//2-50,self.display_size[1]//2-50,32,"向右")

elif (self.draw_state == self.DOWN):

draw_img.draw_arrow(1068,750,1068,950, (255,170,190,230), thickness=13) # 判断为向下挥动时,画一个向下的箭头

draw_img.draw_string_advanced(self.display_size[0]//2-50,self.display_size[1]//2-50,32,"向下")

elif (self.draw_state == self.LEFT):

draw_img.draw_arrow(846,540,600,540, (255,170,190,230), thickness=13) # 判断为向左挥动时,画一个向左的箭头

draw_img.draw_string_advanced(self.display_size[0]//2-50,self.display_size[1]//2-50,32,"向左")

elif (self.draw_state == self.MIDDLE):

draw_img.draw_circle(1068,540,100, (255,170,190,230), thickness=2, fill=True) # 判断为五指捏合手势时,画一个实心圆

draw_img.draw_string_advanced(self.display_size[0]//2-50,self.display_size[1]//2-50,32,"中间")

else:

self.draw_state = self.TRIGGER

pl.osd_img.copy_from(draw_img)

if __name__=="__main__":

# 显示模式,默认"hdmi",可以选择"hdmi"和"lcd"

display_mode="hdmi"

if display_mode=="hdmi":

display_size=[1920,1080]

else:

display_size=[800,480]

# 手掌检测模型路径

hand_det_kmodel_path="/sdcard/app/tests/kmodel/hand_det.kmodel"

# 手部关键点模型路径

hand_kp_kmodel_path="/sdcard/app/tests/kmodel/handkp_det.kmodel"

# 动态手势识别模型路径

gesture_kmodel_path="/sdcard/app/tests/kmodel/gesture.kmodel"

# 其他参数

rgb888p_size=[1920,1080]

hand_det_input_size=[512,512]

hand_kp_input_size=[256,256]

gesture_input_size=[224,224]

confidence_threshold=0.2

nms_threshold=0.5

labels=["hand"]

anchors = [26,27, 53,52, 75,71, 80,99, 106,82, 99,134, 140,113, 161,172, 245,276]

# 初始化PipeLine,只关注传给AI的图像分辨率,显示的分辨率

pl=PipeLine(rgb888p_size=rgb888p_size,display_size=display_size,display_mode=display_mode)

pl.create()

# 自定义动态手势识别任务实例

dg=DynamicGesture(hand_det_kmodel_path,hand_kp_kmodel_path,gesture_kmodel_path,det_input_size=hand_det_input_size,kp_input_size=hand_kp_input_size,gesture_input_size=gesture_input_size,labels=labels,anchors=anchors,confidence_threshold=confidence_threshold,nms_threshold=nms_threshold,nms_option=False,strides=[8,16,32],rgb888p_size=rgb888p_size,display_size=display_size)

try:

while True:

os.exitpoint()

with ScopedTiming("total",1):

img=pl.get_frame() # 获取当前帧

output1,output2=dg.run(img) # 推理当前帧

dg.draw_result(pl,output1,output2) # 绘制推理结果

pl.show_image() # 展示推理结果

gc.collect()

except Exception as e:

sys.print_exception(e)

finally:

dg.hand_det.deinit()

dg.hand_kp.deinit()

dg.dg.deinit()

pl.destroy()

2.2. 注视估计

from libs.PipeLine import PipeLine, ScopedTiming

from libs.AIBase import AIBase

from libs.AI2D import Ai2d

import os

import ujson

from media.media import *

from time import *

import nncase_runtime as nn

import ulab.numpy as np

import time

import image

import aidemo

import random

import gc

import sys

import math

# 自定义人脸检测任务类

class FaceDetApp(AIBase):

def __init__(self,kmodel_path,model_input_size,anchors,confidence_threshold=0.25,nms_threshold=0.3,rgb888p_size=[1280,720],display_size=[1920,1080],debug_mode=0):

super().__init__(kmodel_path,model_input_size,rgb888p_size,debug_mode)

# kmodel路径

self.kmodel_path=kmodel_path

# 检测模型输入分辨率

self.model_input_size=model_input_size

# 置信度阈值

self.confidence_threshold=confidence_threshold

# nms阈值

self.nms_threshold=nms_threshold

self.anchors=anchors

# sensor给到AI的图像分辨率,宽16字节对齐

self.rgb888p_size=[ALIGN_UP(rgb888p_size[0],16),rgb888p_size[1]]

# 视频输出VO分辨率,宽16字节对齐

self.display_size=[ALIGN_UP(display_size[0],16),display_size[1]]

# debug模式

self.debug_mode=debug_mode

# Ai2d实例,用于实现模型预处理

self.ai2d=Ai2d(debug_mode)

# 设置Ai2d的输入输出格式和类型

self.ai2d.set_ai2d_dtype(nn.ai2d_format.NCHW_FMT,nn.ai2d_format.NCHW_FMT,np.uint8, np.uint8)

# 配置预处理操作,这里使用了padding和resize,Ai2d支持crop/shift/pad/resize/affine,具体代码请打开/sdcard/app/libs/AI2D.py查看

def config_preprocess(self,input_image_size=None):

with ScopedTiming("set preprocess config",self.debug_mode > 0):